Neural Style Transfer

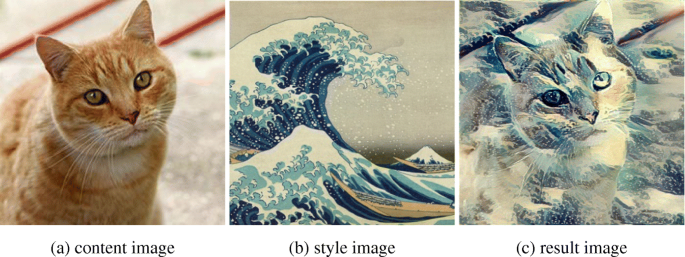

Merging a content image and a style image to produce art!

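Neural Style Transfer (NST) is an interesting optimization technique in deep learning. It merges two images, a “content” image (C) and a “style” image (S), to create a “generated” image (G) that keeps the content of C while adopting the style of S. Unlike most deep learning tasks, NST optimizes the pixels of G rather than the weights of a network.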

NST builds on top of a convolutional network that was previously trained on a different task, an approach known as transfer learning. In this project, I will be using the VGG network from the original NST paper; specifically, VGG-19, the 19-layer version of the network. This model has been trained on the ImageNet dataset, and its weights stay fixed while G is optimized.

1. Style Cost Function

Firstly, to compute the “style” cost, we need to calculate the Gram matrix. At a given layer, each filter (channel) produces a feature map; unrolling every feature map into a row vector gives a matrix A, and the Gram matrix is A·Aᵀ. Entry (i, j) is the dot product of feature maps i and j, so it measures how strongly filters i and j activate together, i.e. how correlated they are. We are interested in these correlations because we want the style of the Style image and the Generated image to be similar.
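
A minimal NumPy sketch of the Gram matrix computation. The function name `gram_matrix` and the toy activations are hypothetical, and the input is assumed to be already unrolled to shape (n_C, n_H × n_W), one row per filter:

```python
import numpy as np

def gram_matrix(A: np.ndarray) -> np.ndarray:
    """Gram matrix of unrolled feature maps.

    A has shape (n_C, n_H * n_W): one row per filter (channel).
    Entry (i, j) is the dot product of filter i's and filter j's activations.
    """
    return A @ A.T

# Hypothetical activations: 2 channels, 3 spatial positions each
A = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 3.0]])
G = gram_matrix(A)
print(G)  # [[5, 2], [2, 10]]
```

The result is always square (n_C × n_C) and symmetric, regardless of the spatial size of the feature maps.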

Next, once the Gram matrices have been calculated for the Style image and the Generated image at a given layer, the style cost for that layer is the sum of the squared differences between their entries, scaled by a normalization constant.
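
The per-layer style cost can be sketched in NumPy as follows. It assumes activations of shape (n_H, n_W, n_C) and uses the normalization constant 1 / (4 · n_C² · (n_H · n_W)²), which is one common choice; the function names are hypothetical:

```python
import numpy as np

def gram_matrix(A):
    # A: (n_C, n_H * n_W) unrolled activations; returns (n_C, n_C)
    return A @ A.T

def layer_style_cost(a_S, a_G):
    """Style cost for one layer.

    a_S, a_G: activations of the style and generated image at this layer,
    each of shape (n_H, n_W, n_C).
    """
    n_H, n_W, n_C = a_G.shape
    # Unroll to (n_C, n_H * n_W): each row holds one channel's activations
    A_S = a_S.reshape(n_H * n_W, n_C).T
    A_G = a_G.reshape(n_H * n_W, n_C).T
    GS, GG = gram_matrix(A_S), gram_matrix(A_G)
    # Sum of squared Gram differences, scaled by 1 / (4 n_C^2 (n_H n_W)^2)
    return np.sum((GS - GG) ** 2) / (4 * n_C**2 * (n_H * n_W) ** 2)

rng = np.random.default_rng(0)
a_S = rng.random((4, 4, 3))  # hypothetical style activations
a_G = rng.random((4, 4, 3))  # hypothetical generated-image activations
print(layer_style_cost(a_S, a_S))  # 0.0
print(layer_style_cost(a_S, a_G))
```

Because only Gram matrices are compared, the cost ignores where in the image a feature appears and keeps only which features co-occur, which is what makes it a measure of style.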

2. Content Cost Function

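In the shallower layers of a CNN, the model detects low-level features such as edges and simple textures, whereas in the deeper layers it detects more complex, higher-level features. We want the content of the generated image to match that of the content image, and comparing activations from a layer in the middle of the CNN usually gives visually pleasing results, since such a layer captures content without being tied to either pixel-level detail or very abstract features.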

This cost is computed by comparing the activations of the content image and the generated image at the chosen layer.
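
A matching NumPy sketch of the content cost. It assumes activations of shape (n_H, n_W, n_C) from the chosen middle layer and a normalization of 1 / (4 · n_H · n_W · n_C), which is one common convention rather than the only one; the function name `content_cost` is hypothetical:

```python
import numpy as np

def content_cost(a_C, a_G):
    """Content cost at one (middle) layer.

    a_C, a_G: activations of the content and generated image,
    each of shape (n_H, n_W, n_C).
    """
    n_H, n_W, n_C = a_G.shape
    # Sum of squared element-wise differences, scaled by 1 / (4 n_H n_W n_C)
    return np.sum((a_C - a_G) ** 2) / (4 * n_H * n_W * n_C)

a_C = np.ones((2, 2, 3))   # hypothetical content activations
a_G = np.zeros((2, 2, 3))  # hypothetical generated-image activations
print(content_cost(a_C, a_G))  # 12 / 48 = 0.25
```

Unlike the style cost, this compares activations position by position, so it penalizes the generated image for putting content in a different place.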

3. Total Cost

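The total cost function is a weighted sum of the Style Cost and the Content Cost, where two hyperparameters (usually written α and β) control the relative importance of content and style.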
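
In symbols, J(G) = α · J_content(C, G) + β · J_style(S, G). A one-line sketch follows; the function name and the default weights alpha = 10 and beta = 40 are illustrative assumptions, not values taken from this project:

```python
def total_cost(J_content: float, J_style: float,
               alpha: float = 10.0, beta: float = 40.0) -> float:
    """Weighted sum of the content cost and the style cost."""
    return alpha * J_content + beta * J_style

print(total_cost(0.25, 0.1))  # 10 * 0.25 + 40 * 0.1 = 6.5
```

Raising beta relative to alpha pushes the result toward the style image; raising alpha keeps it closer to the content image.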

4. Optimization Loop

The various steps for solving the optimization problem are:

- Load the content image

- Load the style image

- Randomly initialize the image to be generated

- Load the VGG19 model

- Compute the content cost

- Compute the style cost

- Compute the total cost

- Define the optimizer and learning rate

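The training loop then repeatedly computes the total cost, takes its gradient with respect to the pixels of the generated image, and lets the optimizer update the image.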
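
The steps above can be illustrated with a toy, self-contained NumPy loop. To keep it runnable without VGG-19, it makes a big simplifying assumption: the “feature map” of an image is the image itself, so the gradients can be derived by hand. A real implementation would run VGG-19 and use automatic differentiation (for example TensorFlow's `tf.GradientTape`) with an optimizer such as Adam; here plain gradient descent is used instead. All names, shapes, and hyperparameters are hypothetical:

```python
import numpy as np

# Toy stand-in for VGG-19 (assumption): the "activations" of an image are the
# image itself, shape (n_H, n_W, n_C), so gradients can be written by hand.

def unroll(a):
    n_H, n_W, n_C = a.shape
    return a.reshape(n_H * n_W, n_C).T  # (n_C, n_H * n_W)

def cost_and_grad(G_img, C_img, S_img, alpha, beta):
    n_H, n_W, n_C = G_img.shape
    k_c = 1.0 / (4 * n_H * n_W * n_C)            # content normalization
    k_s = 1.0 / (4 * n_C**2 * (n_H * n_W) ** 2)  # style normalization
    A_G, A_S = unroll(G_img), unroll(S_img)
    D = A_G @ A_G.T - A_S @ A_S.T                # Gram difference (generated - style)
    J_content = k_c * np.sum((G_img - C_img) ** 2)
    J_style = k_s * np.sum(D ** 2)
    J = alpha * J_content + beta * J_style
    # Hand-derived gradients of each term with respect to the generated image
    grad_content = 2 * k_c * (G_img - C_img)
    grad_A = 4 * k_s * D @ A_G                   # (n_C, n_H * n_W)
    grad_style = grad_A.T.reshape(n_H, n_W, n_C)  # undo the unrolling
    return J, alpha * grad_content + beta * grad_style

rng = np.random.default_rng(0)
C_img = rng.random((4, 4, 3))  # hypothetical content "image"
S_img = rng.random((4, 4, 3))  # hypothetical style "image"
G_img = rng.random((4, 4, 3))  # randomly initialized generated image

learning_rate = 0.5
history = []
for _ in range(200):
    J, grad = cost_and_grad(G_img, C_img, S_img, alpha=10.0, beta=40.0)
    history.append(J)
    G_img -= learning_rate * grad  # plain gradient descent on the pixels

print(f"initial cost {history[0]:.4f}, final cost {history[-1]:.4f}")
```

The style gradient relies on the identity ∂/∂A ‖AAᵀ − G_S‖² = 4(AAᵀ − G_S)A for a symmetric G_S, which is why the Gram difference `D` is multiplied by the unrolled activations before being reshaped back to image form.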