Facial Emotion Recognition

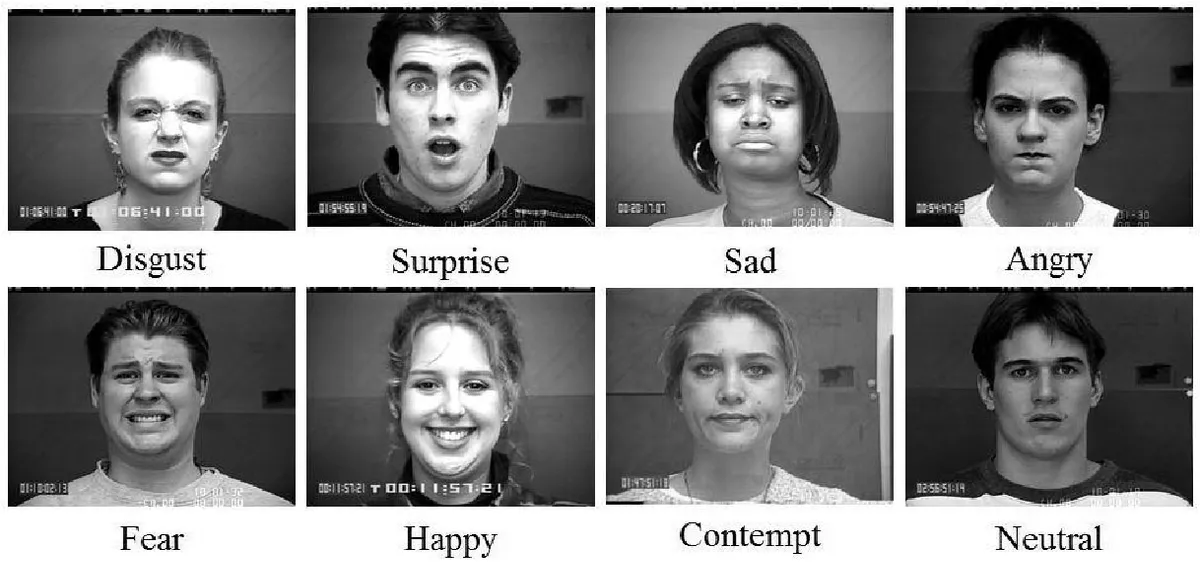

Classifying images of human faces into one of seven basic emotions.

The goal of this project was to classify images of human faces into one of seven basic emotions. To do so, I used a Convolutional Neural Network (CNN), whose main advantage over its predecessors is that it learns the important features on its own, without hand-engineered feature extraction. CNNs are also computationally efficient: convolution reuses the same small set of weights across the whole image, and pooling reduces the size of the feature maps passed to later layers.
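To make the efficiency point concrete, the following minimal sketch compares the number of parameters in a fully connected layer with those in a small convolutional layer on a 48x48 grayscale input, and shows how 2x2 max pooling shrinks a feature map. The layer sizes (64 units, 64 filters of size 3x3) are illustrative assumptions, not values taken from this project.

```python
import numpy as np

H = W = 48  # 48x48 grayscale input

# Fully connected layer: every pixel connects to each of 64 units.
dense_params = (H * W) * 64 + 64      # weights + biases

# Convolutional layer: 64 filters of size 3x3 over 1 input channel,
# with the same filter weights reused at every image position.
conv_params = (3 * 3 * 1) * 64 + 64   # weights + biases


def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 on an (H, W) array with even H and W."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


feature_map = np.arange(H * W, dtype=np.float32).reshape(H, W)
pooled = max_pool_2x2(feature_map)

print(dense_params, conv_params, pooled.shape)
```

Under these assumptions the convolutional layer needs a few hundred parameters where the dense layer needs well over a hundred thousand, and each pooling step halves both spatial dimensions.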

1. The Dataset

The FER-2013 dataset consists of 35,887 images, each of size 48x48 pixels. The data was split into 28,709 images for training and 7,178 images for testing.
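As an illustration of working with images of this size, here is a minimal sketch that turns one sample into an array. It assumes the commonly distributed CSV form of FER-2013, in which each image is stored as a string of 2,304 space-separated grayscale values; the helper names `parse_pixels` and `to_three_channels` are hypothetical. Because ImageNet-pretrained networks expect three-channel input, the sketch also repeats the grayscale channel three times, which is one common choice and not necessarily what was done here.

```python
import numpy as np

IMG_SIZE = 48  # FER-2013 images are 48x48 pixels


def parse_pixels(pixel_string: str) -> np.ndarray:
    """Convert a space-separated pixel string into a (48, 48) float array in [0, 1]."""
    values = np.array([int(v) for v in pixel_string.split()], dtype=np.float32)
    if values.size != IMG_SIZE * IMG_SIZE:
        raise ValueError(f"expected {IMG_SIZE * IMG_SIZE} values, got {values.size}")
    return values.reshape(IMG_SIZE, IMG_SIZE) / 255.0


def to_three_channels(image: np.ndarray) -> np.ndarray:
    """Repeat a (48, 48) grayscale image along a new last axis to get (48, 48, 3)."""
    return np.repeat(image[..., np.newaxis], 3, axis=-1)


# Synthetic example row (not real data): pixel values cycling through 0..255
example = " ".join(str(i % 256) for i in range(IMG_SIZE * IMG_SIZE))
gray = parse_pixels(example)
rgb_like = to_three_channels(gray)
print(gray.shape, rgb_like.shape)
```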

2. The Model

A ResNet50 pretrained on the ImageNet dataset was used as the Convolutional Neural Network. ImageNet has 1000 classes, whereas facial emotion recognition here has only 7. Hence, to fine-tune the network, the shallower layers were frozen and the model was trained on the training set. The building of the fine-tuned network is shown below:

Code
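What follows is a minimal sketch of how such a network could be built, assuming Keras (TensorFlow). The framework, the number of frozen layers (`n_frozen`), the optimizer, the learning rate, and the use of global average pooling are all assumptions made for illustration; none of them is stated in this write-up.

```python
from tensorflow import keras
from tensorflow.keras import layers

NUM_CLASSES = 7  # seven basic emotions


def build_model(weights="imagenet", n_frozen=100):
    """ResNet50 base with a 7-unit softmax head; the first n_frozen base layers are frozen."""
    base = keras.applications.ResNet50(
        weights=weights,          # ImageNet weights; pass None for random initialization
        include_top=False,        # drop the 1000-class ImageNet classifier
        input_shape=(48, 48, 3),  # assumes grayscale images repeated to 3 channels
        pooling="avg",            # global average pooling after the last conv block
    )

    # Freeze the shallower layers (n_frozen is an illustrative value).
    for layer in base.layers[:n_frozen]:
        layer.trainable = False

    # New classification head for the 7 emotion classes.
    outputs = layers.Dense(NUM_CLASSES, activation="softmax")(base.output)
    model = keras.Model(inputs=base.input, outputs=outputs)
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-4),  # assumed settings
        loss="categorical_crossentropy",                      # expects one-hot labels
        metrics=["accuracy"],
    )
    return model
```

With a model built this way, training would be a call to `model.fit` on the training images and their one-hot labels.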


3. Training and Performance

The final layer of the pretrained ResNet50 base was replaced with a 7-unit softmax layer. This model was trained for 20 epochs and reached an accuracy of 85.2%. To improve performance on the FER dataset, some of the layers were then frozen and the model was retrained for 20 epochs, which raised the accuracy from 85.2% to 86.3%.
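To illustrate what a 7-unit softmax layer produces, the sketch below applies a numerically stable softmax to a made-up vector of seven scores and picks the most probable class. The label order follows the usual FER-2013 convention and is an assumption, since the labels are not listed in this write-up; the scores are invented for the example.

```python
import numpy as np

# Assumed label order (the usual FER-2013 convention)
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


logits = np.array([0.2, -1.0, 0.1, 2.5, 0.3, 0.0, 1.1])  # made-up scores
probs = softmax(logits)                                   # seven probabilities summing to 1
predicted = EMOTIONS[int(np.argmax(probs))]
print(predicted, probs.round(3))
```

The reported accuracy is then simply the fraction of images for which this predicted class matches the true label.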
